I have a DRTIO setup with an external 125 MHz clock input (see also https://forum.m-labs.hk/d/599-reference-clock-for-drtio-system). I ran some tests to check whether the RTIO timestamps of the two crates are well synchronized.

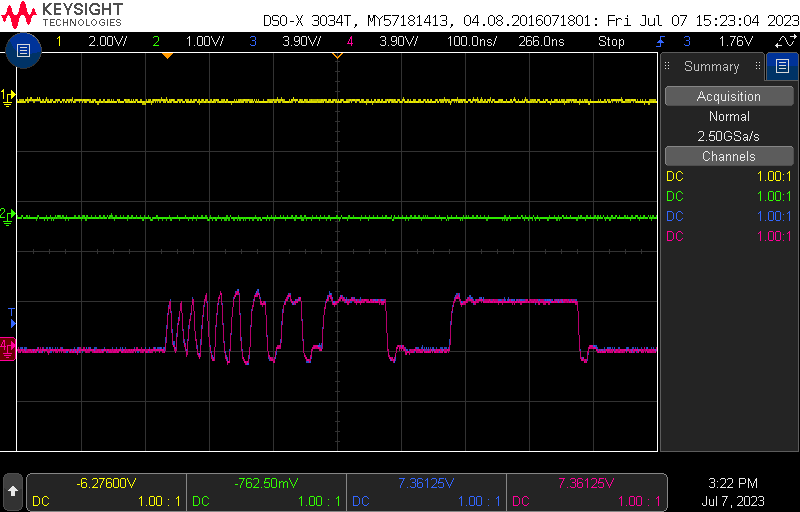

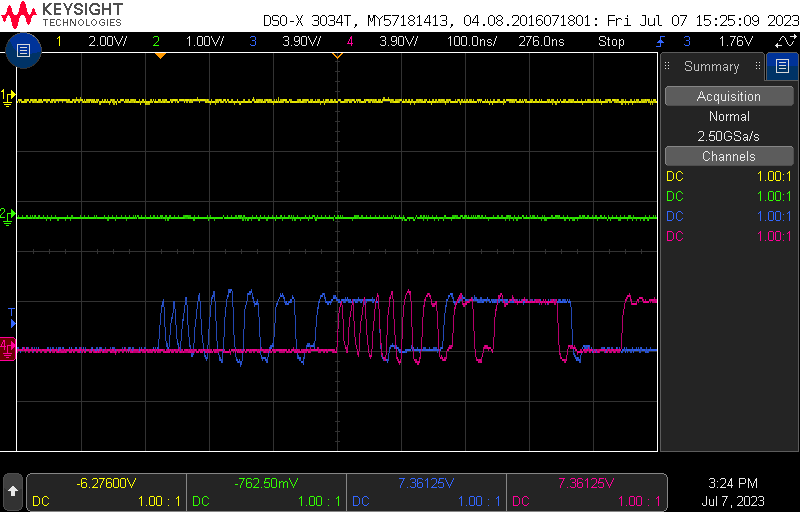

When I connect two TTL outputs from the master to a scope, the pulse trains overlap and the outputs are clearly in sync with nanosecond precision.

For the same experiment with one TTL output from the master and one from the satellite, the satellite's TTL output lags by 276 ns. In the figure, blue is the master.
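To put the 276 ns in perspective, here is a quick conversion of the measured offset into clock periods. This is only a sketch: it assumes the RTIO coarse clock runs at the same 125 MHz as the external reference, and the constant and function names are my own, not anything from ARTIQ.

```python
# Convert the scope-measured master/satellite offset into clock periods.
# Assumption: the RTIO coarse clock equals the 125 MHz external reference.
RTIO_CLOCK_HZ = 125e6        # external reference frequency from the post
MEASURED_OFFSET_NS = 276.0   # satellite lag read off the scope


def offset_in_cycles(offset_ns: float, clock_hz: float) -> float:
    """Return the offset expressed in periods of a clock at clock_hz."""
    period_ns = 1e9 / clock_hz
    return offset_ns / period_ns


period_ns = 1e9 / RTIO_CLOCK_HZ
cycles = offset_in_cycles(MEASURED_OFFSET_NS, RTIO_CLOCK_HZ)
print(f"clock period: {period_ns} ns, offset: {cycles} periods")
```

Under that assumption the period is 8 ns and the offset comes out at 34.5 periods. The scope reading may not be precise enough to tell this apart from a whole number of periods.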

The UART logs of the two crates show the following:

master:

[2023-07-07 14:10:21] [ 0.332466s] INFO(board_artiq::drtio_routing): routing table: RoutingTable { 0: 0; 1: 1 0; }

[2023-07-07 14:10:21] [ 0.344508s] INFO(runtime::mgmt): management interface active

[2023-07-07 14:10:21] [ 0.356530s] INFO(runtime::session): accepting network sessions

[2023-07-07 14:10:21] [ 0.369568s] INFO(runtime::session): running startup kernel

[2023-07-07 14:10:21] [ 0.414358s] INFO(runtime::rtio_mgt::drtio): [DEST#0] destination is up

[2023-07-07 14:10:24] [ 3.227096s] INFO(runtime::rtio_mgt::drtio): [LINK#0] link RX became up, pinging

[2023-07-07 14:10:30] [ 9.116778s] INFO(runtime::rtio_mgt::drtio): [LINK#0] remote replied after 29 packets

[2023-07-07 14:10:30] [ 9.302240s] INFO(runtime::rtio_mgt::drtio): [LINK#0] link initialization completed

[2023-07-07 14:10:30] [ 9.349074s] INFO(runtime::rtio_mgt::drtio): [DEST#1] destination is up

[2023-07-07 14:10:30] [ 9.354518s] INFO(runtime::rtio_mgt::drtio): [DEST#1] buffer space is 128

satellite:

[2023-07-07 14:10:21] [ 0.029856s] INFO(board_misoc::io_expander): MCP23017 io expander 0 not found. Checking for PCA9539.

[2023-07-07 14:10:21] [ 0.058400s] INFO(board_misoc::io_expander): MCP23017 io expander 1 not found. Checking for PCA9539.

[2023-07-07 14:10:22] [ 0.456360s] INFO(board_artiq::si5324): waiting for Si5324 lock...

[2023-07-07 14:10:24] [ 2.401478s] INFO(board_artiq::si5324): ...locked

[2023-07-07 14:10:24] [ 3.033241s] INFO(satman): uplink is up, switching to recovered clock

[2023-07-07 14:10:24] [ 3.066184s] INFO(board_artiq::si5324): waiting for Si5324 lock...

[2023-07-07 14:10:26] [ 4.794828s] INFO(board_artiq::si5324): ...locked

[2023-07-07 14:10:29] [ 8.197790s] INFO(board_artiq::si5324::siphaser): calibration successful, lead: 212, width: 433 (347deg)

[2023-07-07 14:10:30] [ 8.687151s] INFO(satman): TSC loaded from uplink

[2023-07-07 14:10:30] [ 8.816918s] INFO(satman): rank: 1

[2023-07-07 14:10:30] [ 8.819018s] INFO(satman): routing table: RoutingTable { 0: 0; 1: 1 0; }

So it does seem that the master takes its clock from the external 125 MHz input and the satellite locks its clock to the one recovered over DRTIO.

Still, the 276 ns latency remains. Everything runs on ARTIQ v7.8173.ff97675. @sb10q any idea why that is the case?